This post is about the SFxHH Hackathon, which took place in Hamburg in December 2025. The challenge was stark: 4 hours to build, ship, and pitch a working product.

The purpose was not just to demo a concept at the end but to prove that impactful, human-centric AI tools could be built at speed.

The idea

I actually came into the hackathon with a completely different prepared idea. I planned to build a voice agent for accessible healthcare—one that could find available doctors and appointments near a user based on the problem they have.

After talking to different attendees who were looking for teams or team members, I got to hear about the different challenges people were trying to solve. I was quite impressed by one idea in particular: detecting emotion accurately in real time, which could improve customer support by helping agents understand a customer's mood before starting a conversation with them.

As multimodal models get better over time, they no longer just convert voice to text; they can also pick up on a user's pitch, tone, and pace. This data can be extracted from these models easily and used to infer certain emotions from acoustic cues.

The idea was simple: instead of just transcribing text and inferring the mood from the words, what if the system understood how the caller felt from acoustic cues in real time, and gave the customer support agent a head start before the conversation even begins?

Rapid Prototyping with Google AI Studio

To validate our "Acoustic Behavioural Analysis" theory fast, we used Google AI Studio.

It was incredibly easy to prototype the initial logic, and we had a working proof of concept in the AI Studio playground very quickly. We passed in some audio samples to see if it could accurately pick up on nuanced cues like pitch changes and hesitation. It worked.

With the core logic validated, we took that code and started building on top of it. We had some hackathon credits from one of the sponsors, Vapi, a platform that provides an interface for building voice AI agents.

Detecting Emotions using Vapi Assistant

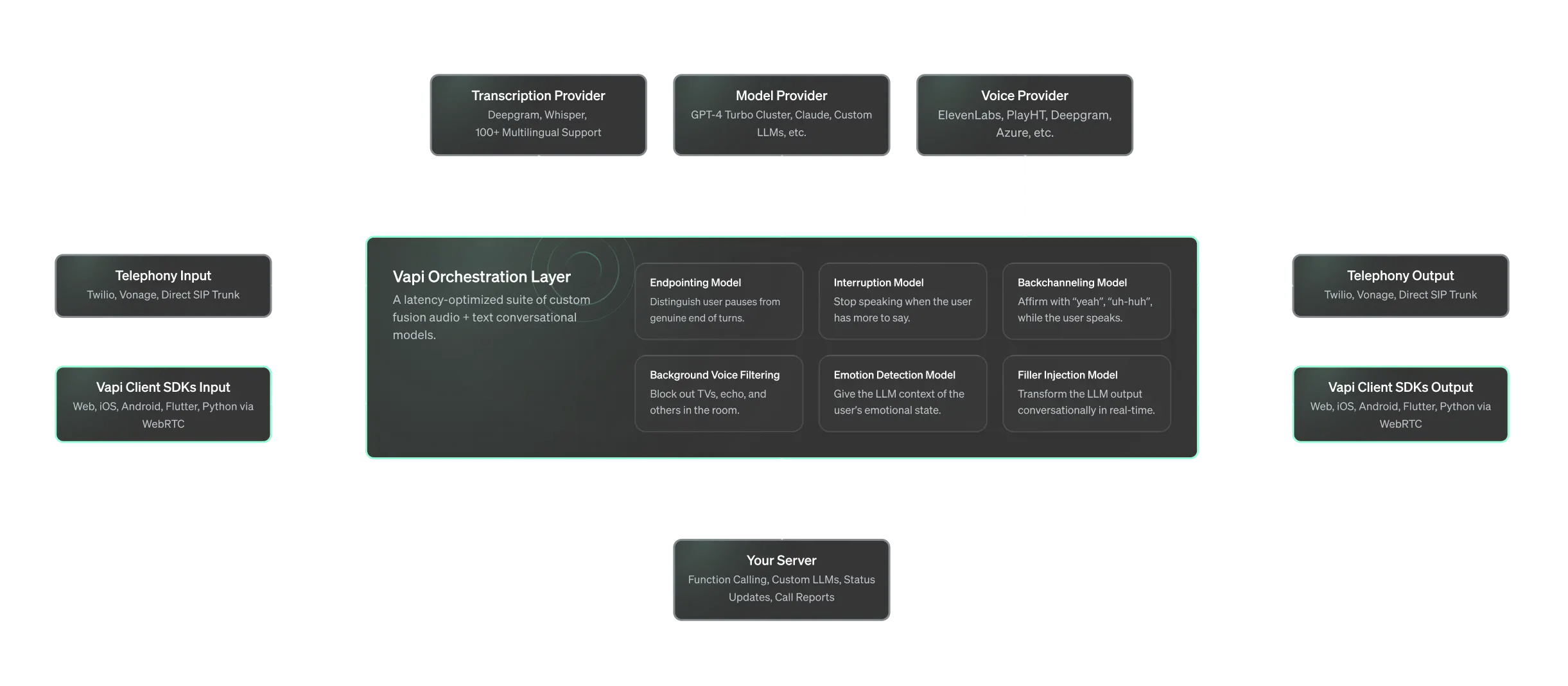

After going through the Vapi docs, we found that Vapi supports emotion detection for its assistants too. The diagram above is a representation of their orchestration layer.

According to their docs, under Emotion Detection:

Emotion detection – Detects user tone and passes emotion to the LLM.

How a person says something is just as important as what they’re saying. So we’ve trained a real-time audio model to extract the emotional inflection of the user’s statement. This emotional information is then fed into the LLM, so knows to behave differently if the user is angry, annoyed, or confused.

We used Vapi as our core engine for voice processing and were able to achieve incredible results. The implementation was also quite straightforward, as Context7 provides documentation that our AI assistant could use to create a working prototype with Vapi Assistant.

How the system works:

Situation:

A user faces an issue and calls support. An AI/robot agent usually handles the initial screening: it collects the user's details and asks them to describe the issue they are facing.

The user says:

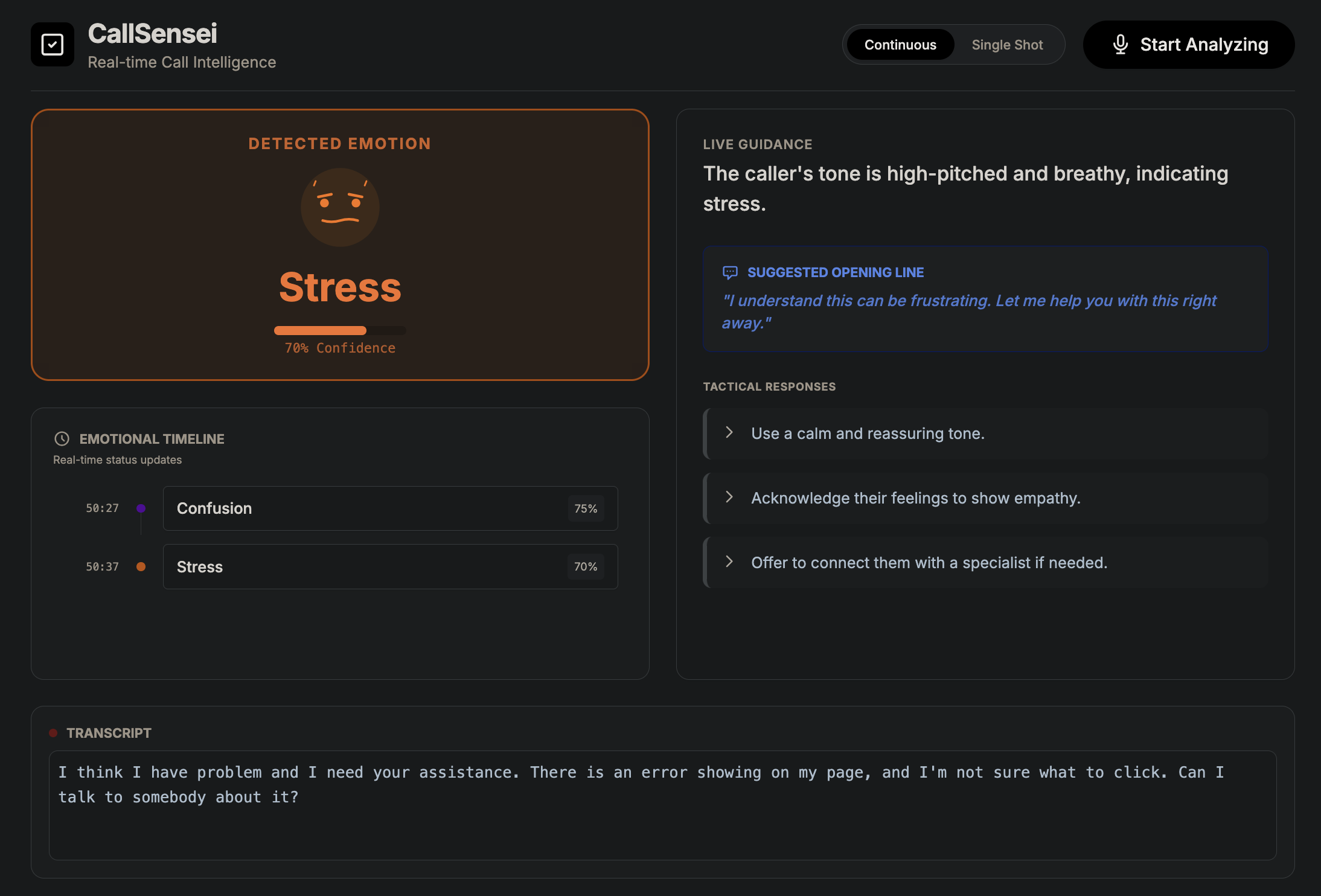

I think I have a problem and I need your assistance. Um, there's an error showing on my page and I'm not sure what to click. Can I talk to somebody about this?

Analysis:

The system analyzes the user's acoustic tone and, based on that, detects the user's emotions in real time.
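
To make the analysis step more concrete, here is a minimal sketch of how acoustic cues could be mapped to a coarse emotion label. It is a rule-based stand-in for illustration only, not the real-time audio model Vapi uses and not the multimodal prototype we built. The `AcousticCues` fields, the thresholds, and the label names are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class AcousticCues:
    """Hypothetical per-utterance features; not a Vapi or AI Studio type."""
    pitch_variation_hz: float  # how much the pitch swings while speaking
    words_per_minute: float    # speaking pace
    filler_count: int          # "um", "uh", and similar hesitations
    loudness_db: float         # loudness relative to the caller's baseline


def estimate_emotion(cues: AcousticCues) -> str:
    """Map acoustic cues to a coarse label using illustrative thresholds."""
    # Loud, fast speech with wide pitch swings is treated as anger.
    if (cues.loudness_db > 6
            and cues.words_per_minute > 170
            and cues.pitch_variation_hz > 40):
        return "angry"
    # Several fillers at a slow pace suggest hesitation or confusion.
    if cues.filler_count >= 2 and cues.words_per_minute < 130:
        return "confused"
    # Fast speech without the markers of anger is treated as anxious.
    if cues.words_per_minute > 170:
        return "anxious"
    return "neutral"


# Assumed values for a hesitant caller like the one quoted earlier.
hesitant_caller = AcousticCues(
    pitch_variation_hz=15.0,
    words_per_minute=110.0,
    filler_count=3,
    loudness_db=0.0,
)
print(estimate_emotion(hesitant_caller))
```

A real system would learn these boundaries from audio rather than hard-code them, which is the main reason to rely on a trained model instead of rules like these.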

Result:

The support agent is presented with a dashboard that shows the user's emotions in real time, the problem the user is facing, and steps to help resolve the issue in the best possible way.
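
As a rough illustration of what such a dashboard could receive, the sketch below bundles those three pieces (emotion, problem, and suggested steps) into one JSON payload. The `CallSummary` class, its field names, and the sample values are hypothetical and are not taken from the CallSensei code.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class CallSummary:
    """Hypothetical payload for the support agent's dashboard."""
    emotion: str
    problem: str
    suggested_steps: list[str] = field(default_factory=list)


def to_dashboard_json(summary: CallSummary) -> str:
    """Serialize the summary so a web dashboard could render it."""
    return json.dumps(asdict(summary), indent=2)


summary = CallSummary(
    emotion="confused",
    problem="Error shown on the page; caller is unsure what to click",
    suggested_steps=[
        "Acknowledge the confusion and reassure the caller",
        "Ask which page shows the error",
        "Walk through the fix one click at a time",
    ],
)
print(to_dashboard_json(summary))
```

Keeping the payload this small means it can be re-sent whenever the detected emotion changes during the call.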

Value:

- Smoother conversations: De-escalating anger before it boils over.

- Early preparation: The dashboard provides help docs, if needed, for the issue the user is facing, based on the described problem.

- Faster resolutions: Support agents know exactly what the caller needs and how to handle and resolve the issue in the best possible way.

MVP

The code for the MVP version that we demoed at the hackathon can be found on GitHub - CallSensei.